
[Recommender Systems (RS)] Wide & Deep Learning for Recommender System


코딩걸음마 2022. 7. 20. 01:03

0. Install and import libraries

!pip install pytorch-widedeep

import os

import pandas as pd

import numpy as np

from sklearn.model_selection import train_test_split

1. Load and process the data

This post uses the KMRD dataset.

GitHub - lovit/kmrd: Synthetic dataset for recommender system created from Naver Movie rating system


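data_path = "file path"  # placeholder: set this to your working directory

%cd $data_path

# Clone and install the KMRD repository only if the path does not exist yet
if not os.path.exists(data_path):
    !git clone https://github.com/lovit/kmrd
    !python setup.py install
else:
    print("data and path already exists!")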

path = data_path + 'file path 2'  # placeholder: sub-path of the folder that holds rates.csv

df = pd.read_csv(os.path.join(path,'rates.csv'))

train_df, val_df = train_test_split(df, test_size=0.2, random_state=1234, shuffle=True)

Data processing

# Load all related dataframe

movies_df = pd.read_csv(os.path.join(path, 'movies.txt'), sep='\t', encoding='utf-8')

movies_df = movies_df.set_index('movie')

castings_df = pd.read_csv(os.path.join(path, 'castings.csv'), encoding='utf-8')

countries_df = pd.read_csv(os.path.join(path, 'countries.csv'), encoding='utf-8')

genres_df = pd.read_csv(os.path.join(path, 'genres.csv'), encoding='utf-8')

# Get genre information

genres = [(list(set(x['movie'].values))[0], '/'.join(x['genre'].values)) for index, x in genres_df.groupby('movie')]

combined_genres_df = pd.DataFrame(data=genres, columns=['movie', 'genres'])

combined_genres_df = combined_genres_df.set_index('movie')

# Get castings information

castings = [(list(set(x['movie'].values))[0], x['people'].values) for index, x in castings_df.groupby('movie')]

combined_castings_df = pd.DataFrame(data=castings, columns=['movie','people'])

combined_castings_df = combined_castings_df.set_index('movie')

# Get countries for movie information

countries = [(list(set(x['movie'].values))[0], ','.join(x['country'].values)) for index, x in countries_df.groupby('movie')]

combined_countries_df = pd.DataFrame(data=countries, columns=['movie', 'country'])

combined_countries_df = combined_countries_df.set_index('movie')

movies_df = pd.concat([movies_df, combined_genres_df, combined_castings_df, combined_countries_df], axis=1)

print(movies_df.shape)

print(movies_df.head())
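The per-movie aggregation above can also be written as a single `groupby(...).agg(...)` call. The following is a minimal sketch on a hypothetical toy frame: the movie IDs and genre names are made up for illustration, and only the `movie` and `genre` column names come from the code above.

```python
import pandas as pd

# Hypothetical toy data: one row per (movie, genre) pair, like genres.csv
genres_df = pd.DataFrame(
    {"movie": [10, 10, 20], "genre": ["drama", "romance", "action"]}
)

# Join all genres of a movie into one '/'-separated string, indexed by movie
combined_genres_df = (
    genres_df.groupby("movie")["genre"].agg("/".join).to_frame("genres")
)
print(combined_genres_df)
```

The result is already indexed by `movie`, so no separate `set_index` step is needed in this variant.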

dummy_genres_df = movies_df['genres'].str.get_dummies(sep='/')

train_genres_df = train_df['movie'].apply(lambda x: dummy_genres_df.loc[x])

train_genres_df.head()

dummy_grade_df = pd.get_dummies(movies_df['grade'], prefix='grade')

train_grade_df = train_df['movie'].apply(lambda x: dummy_grade_df.loc[x])

train_grade_df.head()

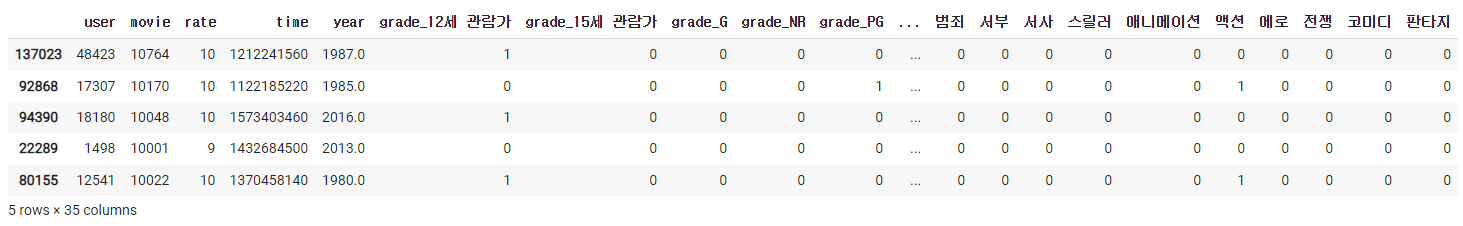
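To make the encoding step concrete, here is a small pure-Python sketch of what a multi-hot encoding of '/'-joined genre strings looks like. It only illustrates the kind of table that `str.get_dummies(sep='/')` returns; the helper name `multi_hot` and the sample strings are hypothetical, and this is not pandas' implementation.

```python
def multi_hot(genre_strings, sep="/"):
    """Return (sorted vocabulary, one 0/1 row per input string)."""
    vocab = sorted({g for s in genre_strings for g in s.split(sep)})
    rows = [[1 if g in s.split(sep) else 0 for g in vocab] for s in genre_strings]
    return vocab, rows

# Hypothetical genre strings in the same '/'-joined format as movies_df['genres']
vocab, rows = multi_hot(["drama/romance", "action", "drama"])
print(vocab)
for row in rows:
    print(row)
```

Each column corresponds to one genre, and a movie with several genres gets a 1 in several columns.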

train_df['year'] = train_df.apply(lambda x: movies_df.loc[x['movie']]['year'], axis=1)

train_df = pd.concat([train_df, train_grade_df, train_genres_df], axis=1)

train_df.head()
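The `apply(lambda x: ....loc[x])` pattern used above performs one lookup per rating row, which can be slow on a large ratings table. As a possible alternative, the sketch below attaches the same columns with a single `DataFrame.join` on the `movie` column. The two small frames are hypothetical stand-ins for `dummy_genres_df` and `train_df`.

```python
import pandas as pd

# Hypothetical stand-ins for dummy_genres_df (indexed by movie) and train_df
dummy_genres_df = pd.DataFrame(
    {"action": [0, 1], "drama": [1, 0]},
    index=pd.Index([10, 20], name="movie"),
)
train_df = pd.DataFrame(
    {"user": [0, 1, 2], "movie": [10, 20, 10], "rate": [9, 7, 10]}
)

# Row-by-row lookup, as in the code above
via_apply = train_df["movie"].apply(lambda m: dummy_genres_df.loc[m])

# Same feature values with one join on the 'movie' column
via_join = train_df.join(dummy_genres_df, on="movie")
print(via_join)
```

The join keeps the original rating columns and appends the one-hot columns, so the later `pd.concat` step would not be needed for the frames joined this way.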

wide_cols = list(dummy_genres_df.columns) + list(dummy_grade_df.columns)

wide_cols

Out:

['SF', '가족', '공포', '느와르', '다큐멘터리', '드라마', '로맨스', '멜로', '모험', '뮤지컬',
 '미스터리', '범죄', '서부', '서사', '스릴러', '애니메이션', '액션', '에로', '전쟁', '코미디', '판타지',
 'grade_12세 관람가', 'grade_15세 관람가', 'grade_G', 'grade_NR', 'grade_PG', 'grade_PG-13',
 'grade_R', 'grade_전체 관람가', 'grade_청소년 관람불가']

Generate genre combinations

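from itertools import product

# Pair every column with every column by zipping wide_cols against each element
# of the Cartesian product. The product has len(wide_cols) ** len(wide_cols)
# elements, so this is only feasible for a handful of columns.
unique_combinations = list(
    list(zip(wide_cols, element))
    for element in product(wide_cols, repeat=len(wide_cols))
)
print(unique_combinations)

# Flatten, drop self-pairs such as ('SF', 'SF'), and de-duplicate
cross_cols = [item for sublist in unique_combinations for item in sublist]
cross_cols = [x for x in cross_cols if x[0] != x[1]]
cross_cols = list(set(cross_cols))
print(cross_cols)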

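Applying the method above to only 5 genres (the first 5 entries of wide_cols) yields 20 combinations, that is, 5 × 4 ordered pairs. The truncation must run before the combination code, because the product over all 30 columns is far too large to enumerate.

print(len(wide_cols))

print(wide_cols)

wide_cols = wide_cols[:5]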
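The count of 20 can be checked independently: the zip-over-product construction ends up producing every ordered pair of distinct columns, which is what `itertools.permutations(cols, 2)` enumerates directly. A minimal sketch, using the hypothetical names `a` to `e` in place of the five genre columns:

```python
from itertools import permutations, product

cols = ["a", "b", "c", "d", "e"]  # hypothetical stand-ins for wide_cols[:5]

# The approach from the post: zip the columns against every product element
via_product = {
    pair
    for element in product(cols, repeat=len(cols))
    for pair in zip(cols, element)
    if pair[0] != pair[1]
}

# Direct enumeration of ordered pairs of distinct columns
via_permutations = set(permutations(cols, 2))

print(len(via_permutations))
print(via_product == via_permutations)
```

The direct enumeration visits n × (n − 1) pairs instead of n ** n product elements, so it would also stay cheap for the full list of 30 columns.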

# embed_cols = [('genre', 16),('grade', 16)]

embed_cols = list(set([(x[0], 16) for x in cross_cols]))

continuous_cols = ['year']

print(embed_cols)

print(continuous_cols)
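Each tuple in `cross_cols` names a pair of columns to be crossed in the wide part. In the Wide & Deep paper, a cross-product transformation over binary features is 1 only when all of its constituent features are 1. The sketch below illustrates that idea in plain Python; the helper name and the toy rows are hypothetical, and it does not claim to mirror how pytorch-widedeep handles `crossed_cols` internally.

```python
def cross_product_feature(row, pair):
    """Return 1 only if both binary features named in `pair` are 1 in `row`."""
    a, b = pair
    return int(row[a] == 1 and row[b] == 1)

# Hypothetical one-hot rows; the keys stand in for genre columns
rows = [
    {"drama": 1, "romance": 1, "action": 0},
    {"drama": 1, "romance": 0, "action": 0},
    {"drama": 0, "romance": 0, "action": 1},
]
pair = ("drama", "romance")
crossed = [cross_product_feature(r, pair) for r in rows]
print(crossed)
```

Only the first row activates the crossed feature, because it is the only one where both genres are present.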

Wide & Deep

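# Note: DensePreprocessor and DeepDense are names from older pytorch-widedeep
# releases, so the installed version must still provide them.
from pytorch_widedeep.preprocessing import WidePreprocessor, DensePreprocessor

from pytorch_widedeep.models import Wide, DeepDense, WideDeep

from pytorch_widedeep.metrics import Accuracy

Wide Component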

preprocess_wide = WidePreprocessor(wide_cols=wide_cols, crossed_cols=cross_cols)

X_wide = preprocess_wide.fit_transform(train_df)

wide = Wide(wide_dim=np.unique(X_wide).shape[0], pred_dim=1)

Deep Component

preprocess_deep = DensePreprocessor(embed_cols=embed_cols, continuous_cols=continuous_cols)

X_deep = preprocess_deep.fit_transform(train_df)

deepdense = DeepDense(
    hidden_layers=[64, 32],
    deep_column_idx=preprocess_deep.deep_column_idx,
    embed_input=preprocess_deep.embeddings_input,
    continuous_cols=continuous_cols,
)

Build and Train

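# build, compile and fit

# Assumption: `target` is not defined anywhere in the original post. Because
# method="binary" needs 0/1 labels, this line binarizes the rating, assuming
# rates.csv stores it in a 'rate' column. The threshold of 9 is illustrative
# and not taken from the source.
target = (train_df['rate'] > 9).astype(int).values

model = WideDeep(wide=wide, deepdense=deepdense)

model.compile(method="binary", metrics=[Accuracy])

model.fit(
    X_wide=X_wide,
    X_deep=X_deep,
    target=target,
    n_epochs=5,
    batch_size=256,
    val_split=0.1,
)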
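In the Wide & Deep paper, the final prediction is the sigmoid of the summed wide and deep outputs, and both parts are trained jointly. The sketch below shows that combination step with the standard library only; the function name and the logit values are hypothetical and are not part of the pytorch-widedeep API.

```python
import math

def wide_deep_probability(wide_logit, deep_logit, bias=0.0):
    """Sum the two component outputs and squash the result with a sigmoid."""
    z = wide_logit + deep_logit + bias
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical component outputs for one (user, movie) example
p = wide_deep_probability(wide_logit=0.8, deep_logit=-0.3)
print(round(p, 4))
```

With a binary objective, a probability above 0.5 would be read as a predicted positive label.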
